The TF3810 TwinCAT 3 Function is a high-performance execution module (inference engine) for trained neural networks.

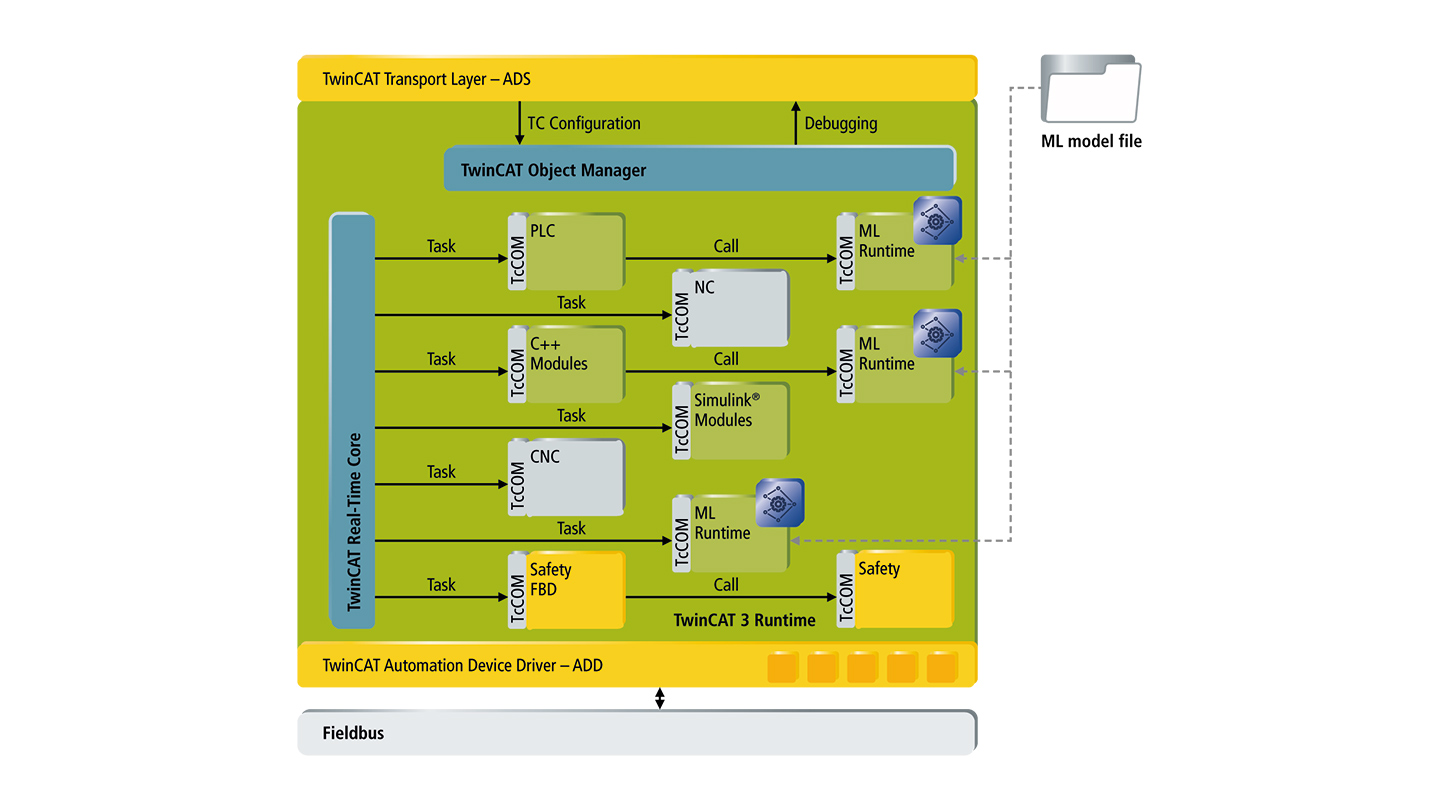

Beckhoff offers a machine learning (ML) solution that is seamlessly integrated into TwinCAT 3. Because it builds on established standards, ML applications benefit from the same system openness that is familiar from PC-based control. In addition, the neural networks are executed in real time, giving machine builders a solid foundation for improving machine performance.

The neural networks are trained in a variety of frameworks established for data scientists, such as SciKit-Learn, PyTorch, and TensorFlow, as well as in the TE3850 TwinCAT 3 Machine Learning Creator, which is optimized for automation engineers. In each case, the AI model created is exported from the learning environment as an ONNX file. ONNX (Open Neural Network Exchange) has established itself as an open standard for interoperability in machine learning, ensuring a clear separation between the learning environment and the execution environment of trained models.

The ONNX file can be read into TwinCAT 3 and supplemented with application-specific meta information, such as the model name, model version, and a brief description. The TwinCAT 3 PLC provides a function block that loads the AI model description file and executes it on a cycle-synchronous basis. These loading and execution processes are implemented as methods of the function block, which ensures the AI model is deeply integrated into the machine application.
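The load-then-execute pattern described above can be illustrated with a minimal sketch. It is written in Python purely for illustration and is not the TwinCAT 3 PLC API: the names `ModelMeta`, `InferenceBlock`, `configure`, and `predict` are hypothetical, and the tiny hand-written network stands in for a model that would normally come from a description file.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelMeta:
    """Application-specific meta information attached to a model (hypothetical)."""
    name: str
    version: str
    description: str


class InferenceBlock:
    """Hypothetical stand-in for a function block with a load and an execute method."""

    def __init__(self):
        self.meta = None
        self._layers = []  # one (weights, biases) pair per dense layer

    def configure(self, meta, layers):
        """'Load' step: done once, outside the cyclic part of the application."""
        for weights, biases in layers:
            if len(weights) != len(biases):
                raise ValueError("each layer needs one bias per output neuron")
        self.meta = meta
        self._layers = layers

    def predict(self, inputs):
        """'Execute' step: one forward pass, intended to be called once per cycle."""
        if not self._layers:
            raise RuntimeError("model not loaded; call configure() first")
        activations = list(inputs)
        last = len(self._layers) - 1
        for index, (weights, biases) in enumerate(self._layers):
            summed = [
                sum(w * a for w, a in zip(row, activations)) + b
                for row, b in zip(weights, biases)
            ]
            # tanh on hidden layers, identity on the output layer (regression)
            activations = summed if index == last else [math.tanh(s) for s in summed]
        return activations


block = InferenceBlock()
block.configure(
    ModelMeta("demo_regressor", "1.0.0", "toy 2-2-1 network"),
    layers=[
        ([[1.0, 0.0], [0.0, 1.0]], [0.0, 0.0]),  # hidden layer, 2 neurons
        ([[1.0, 1.0]], [0.5]),                   # output layer, 1 neuron
    ],
)

# Simulated cyclic task: one inference per cycle with fresh input values
for cycle, sample in enumerate([[0.0, 0.0], [1.0, -1.0]]):
    print(cycle, block.predict(sample))
```

Separating the one-time load step from the per-cycle execute step keeps the cyclic part short and predictable, which is the property that matters for cycle-synchronous execution.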

Neural networks can be applied to many kinds of data, including tabular data, time series, and image data. They handle both classification and regression tasks, which opens up a wide range of applications, such as residual lifetime prediction, sensor fusion / virtual sensor technology, product quality prediction, and automatic machine parameter tuning.

Product status: regular delivery

Product information

| Technical data | TF3810 |
|---|---|
| Required license | TC1000 |
| Includes | TF3800, TF7800, TF7810 |
| Operating system | Windows 7, Windows 10, TwinCAT/BSD |
| CPU architecture | x64 |

| Ordering information | |

|---|---|

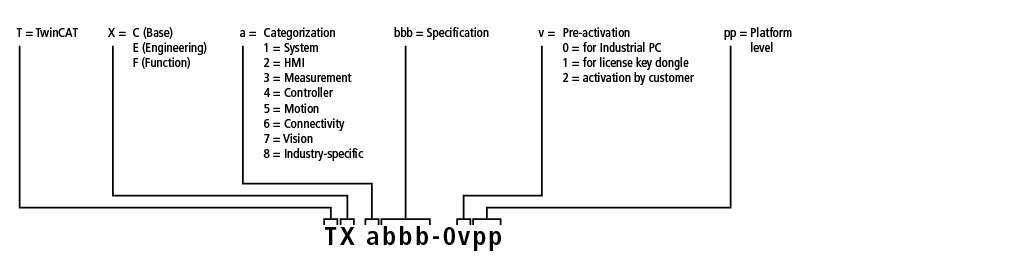

| TF3810-0v40 | TwinCAT 3 Neural Network Inference Engine, platform level 40 (Performance) |

| TF3810-0v50 | TwinCAT 3 Neural Network Inference Engine, platform level 50 (Performance Plus) |

| TF3810-0v60 | TwinCAT 3 Neural Network Inference Engine, platform level 60 (Mid Performance) |

| TF3810-0v70 | TwinCAT 3 Neural Network Inference Engine, platform level 70 (High Performance) |

| TF3810-0v80 | TwinCAT 3 Neural Network Inference Engine, platform level 80 (Very High Performance) |

| TF3810-0v81 | TwinCAT 3 Neural Network Inference Engine, platform level 81 (Very High Performance) |

| TF3810-0v82 | TwinCAT 3 Neural Network Inference Engine, platform level 82 (Very High Performance) |

| TF3810-0v83 | TwinCAT 3 Neural Network Inference Engine, platform level 83 (Very High Performance) |

| TF3810-0v84 | TwinCAT 3 Neural Network Inference Engine, platform level 84 (Very High Performance) |

| TF3810-0v90 | TwinCAT 3 Neural Network Inference Engine, platform level 90 (Other) |

| TF3810-0v91 | TwinCAT 3 Neural Network Inference Engine, platform level 91 (Other 5…8 Cores) |

| TF3810-0v92 | TwinCAT 3 Neural Network Inference Engine, platform level 92 (Other 9…16 Cores) |

| TF3810-0v93 | TwinCAT 3 Neural Network Inference Engine, platform level 93 (Other 17…32 Cores) |

| TF3810-0v94 | TwinCAT 3 Neural Network Inference Engine, platform level 94 (Other 33…64 Cores) |

We recommend using a TwinCAT 3 license dongle for platform levels 90-94.

© Beckhoff Automation 2024 - Terms of Use